OPER

Configurations

Three sloop-hycom3d configurations are operated by the Meteo-France services.

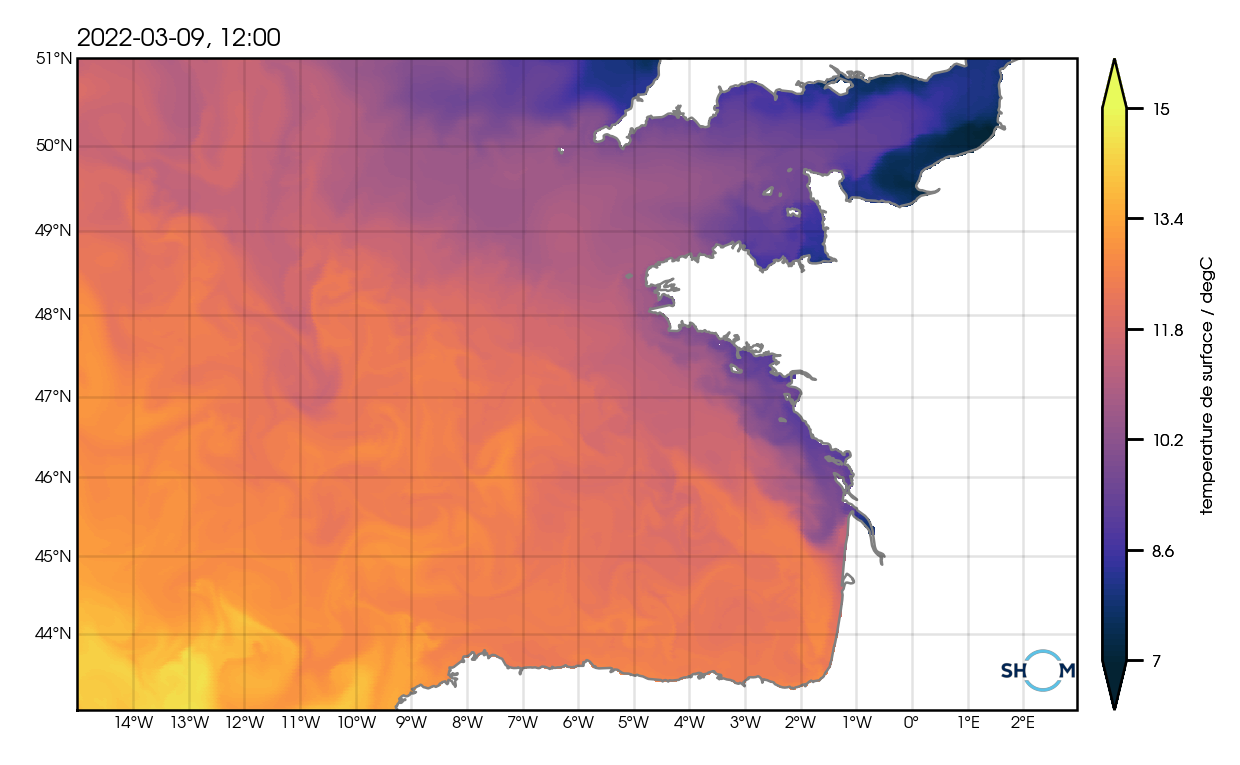

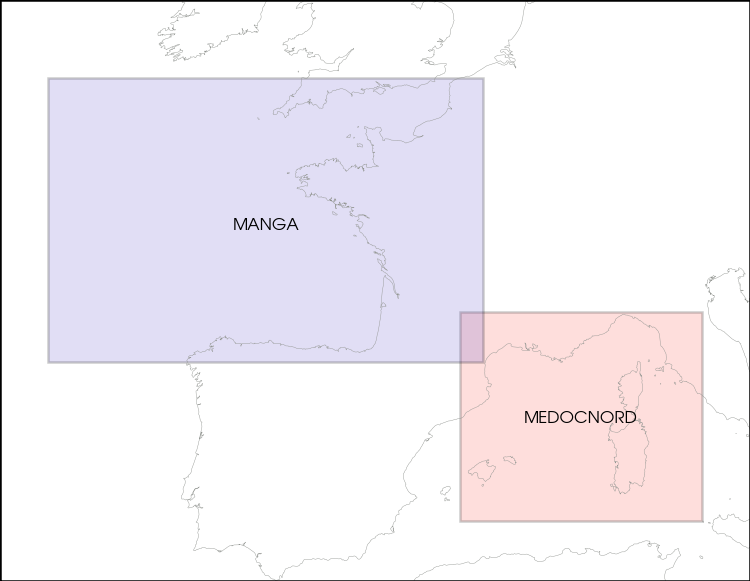

Hycom3d-Manga

area : English Channel and Bay of Biscay

horizontal resolution : 1/40°

vertical resolution : 40 levels

atmospheric fluxes: arpege eurat01 (Meteo-France)

open boundaries and spectral nudging: psy4 (Mercator Ocean International)

rivers inflow: real time observations (CMEMS)

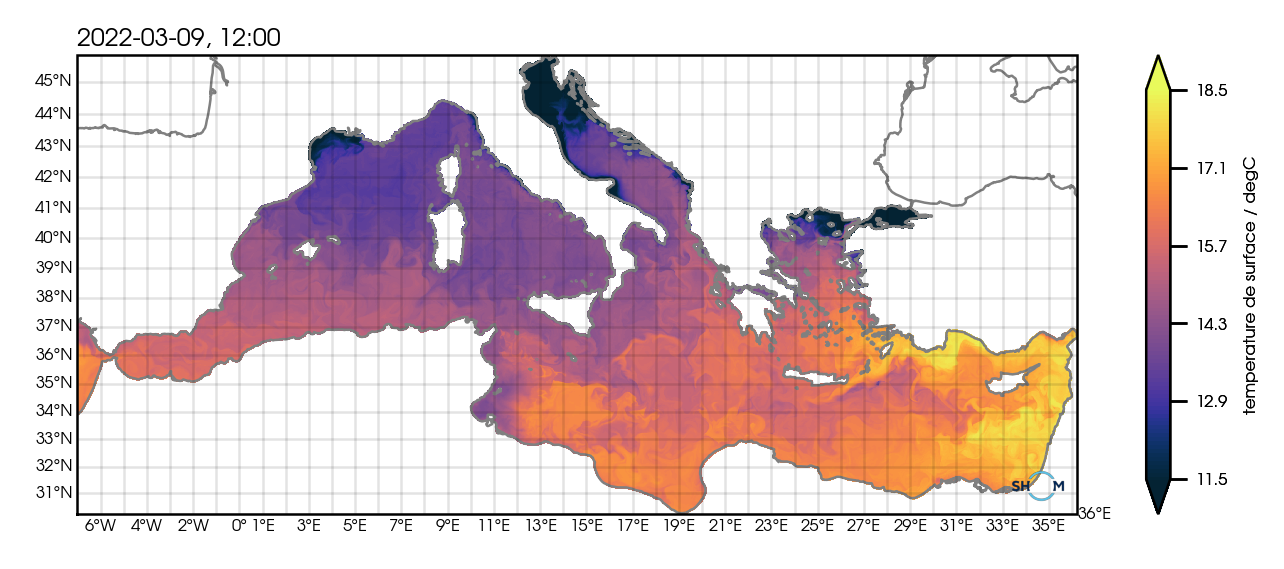

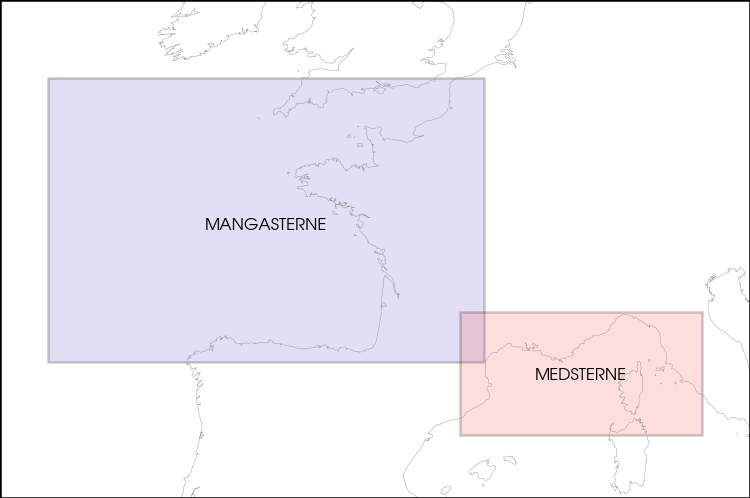

Hycom3d-Med

area : Mediterranean Sea

horizontal resolution : 1/60°

vertical resolution : 32 levels

atmospheric fluxes: arpege eurat01 (Meteo-France)

open boundaries and spectral nudging: psy4 (Mercator Ocean International)

rivers inflow: climatology + real time observations (CMEMS)

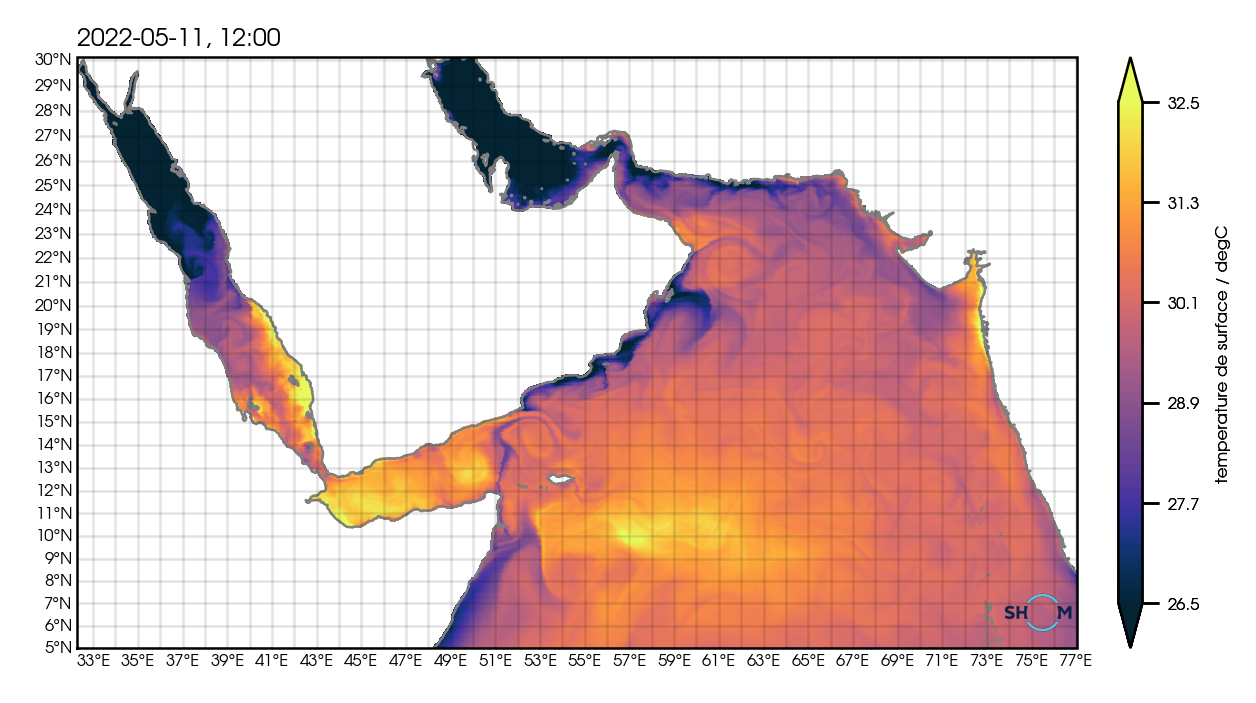

Hycom3d-Indien

area : Arabian Sea, Red Sea and Persian Gulf

horizontal resolution : 1/40°

vertical resolution : 40 levels

atmospheric fluxes: arpege glob025 (Meteo-France)

open boundaries and spectral nudging: psy4 (Mercator Ocean International)

rivers inflow: climatology

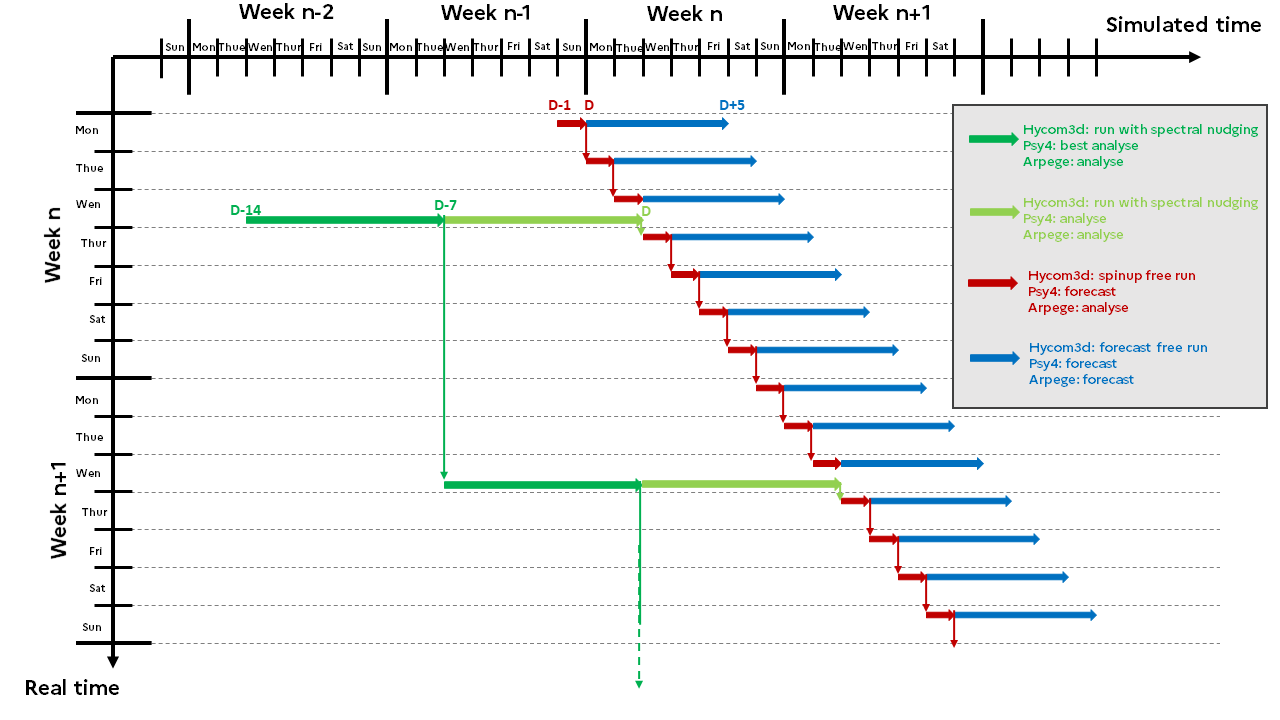

Scenario

Daily

The workflow starts at 05:40:00 UTC, and ends:

for the manga configuration at 06:10:00 UTC

for the med configuration at 07:05:00 UTC

for the indien configuration at 06:20:00 UTC

Weekly

The workflow starts at 13:00:00 UTC, and ends:

for the manga configuration at 14:50:00 UTC

for the med configuration at 18:30:00 UTC

for the indien configuration at 15:06:00 UTC
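As a quick cross-check of the schedule above, the wall-clock length of each workflow can be derived from its start and end times. The following is a minimal sketch: the times are copied from this section, while the `SCHEDULE` layout and the function names are illustrative assumptions and are not part of sloop.

```python
from datetime import datetime

# Start and end times (UTC) copied from the Daily and Weekly schedules above.
SCHEDULE = {
    "daily": {
        "start": "05:40:00",
        "end": {"manga": "06:10:00", "med": "07:05:00", "indien": "06:20:00"},
    },
    "weekly": {
        "start": "13:00:00",
        "end": {"manga": "14:50:00", "med": "18:30:00", "indien": "15:06:00"},
    },
}


def elapsed_minutes(start, end):
    """Return the number of whole minutes between two HH:MM:SS times of the same day."""
    fmt = "%H:%M:%S"
    delta = datetime.strptime(end, fmt) - datetime.strptime(start, fmt)
    return int(delta.total_seconds() // 60)


def workflow_durations(schedule=SCHEDULE):
    """Map each workflow frequency and configuration to its duration in minutes."""
    return {
        freq: {conf: elapsed_minutes(spec["start"], end) for conf, end in spec["end"].items()}
        for freq, spec in schedule.items()
    }


if __name__ == "__main__":
    for freq, per_conf in workflow_durations().items():
        for conf, minutes in per_conf.items():
            print(f"{freq:6s} {conf:6s} {minutes:4d} min")
```

With these figures the med configuration is the longest workflow in both the daily and the weekly scenario.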

Vortex jobs, tasks & conf

Execution durations are given in hh:mm:ss for each configuration (Manga, Med, Indien).

| Operational workflow | Jobs | Manga | Med | Indien | Tasks | Description |
| --- | --- | --- | --- | --- | --- | --- |
| Fetch flow data, daily | recextspinup_shom[conf] | 00:00:30 | ####### | 00:00:10 | recextfiles_spinup | Fetch arpege, psy4 and cmems data |
| Fetch flow data, daily | recextforecast_shom[conf] | 00:01:10 | ####### | 00:00:30 | recextfiles_forecast | Fetch arpege, psy4 and cmems data |
| Fetch flow data, weekly | recextspnudge_shom[conf] | 00:05:10 | ####### | 00:01:10 | recextfiles_spnudge | Fetch arpege, psy4 and cmems data |
| Pre-processings, daily | riverspinup_shom[conf] | 00:00:15 | 00:00:16 | 00:00:14 | run_rivers_spinup | Build rivers inflow .r files |
| Pre-processings, daily | riverforecast_shom[conf] | 00:00:19 | 00:00:22 | 00:00:17 | run_rivers_forecast | Build rivers inflow .r files |
| Pre-processings, daily | atmfrcspinup_shom[conf] | 00:01:27 | 00:02:18 | 00:02:29 | run_atmfrc_spinup | Build atmospheric fluxes .[a/b] files |
| Pre-processings, daily | atmfrcforecast_shom[conf] | 00:04:11 | 00:06:04 | 00:09:28 | run_atmfrc_forecast | Build atmospheric fluxes .[a/b] files |
| Pre-processings, daily | ibcspinup_shom[conf] | 00:03:14 | 00:06:06 | 00:02:07 | run_ibc_spinup | Build boundary conditions .[a/b] files |
| Pre-processings, daily | ibcforecast_shom[conf] | 00:06:08 | 00:13:37 | 00:04:28 | run_ibc_forecast | Build boundary conditions .[a/b] files |
| Pre-processings, weekly | atmfrcspnudge_shom[conf] | 00:14:33 | 00:12:45 | 00:22:56 | run_atmfrc_spnudge | Build atmospheric fluxes .[a/b] files |
| Pre-processings, weekly | riverspnudge_shom[conf] | 00:00:55 | 00:00:46 | 00:00:35 | run_rivers_spnudge | Build rivers inflow .r files |
| Pre-processings, weekly | ibcspnudge_shom[conf] | 00:15:03 | 00:34:48 | 00:11:20 | run_ibc_spnudge | Build boundary conditions .[a/b] files |
| Model run, daily | modelspinup_shom[conf] | 00:03:07 | 00:08:57 | 00:02:35 | run_model_spinup | Spinup free model execution |
| Model run, daily | modelforecast_shom[conf] | 00:14:11 | 00:41:08 | 00:11:38 | run_model_forecast | Forecast free model execution |
| Model run, weekly | modelspnudge_shom[conf] | 01:21:17 | 04:19:02 | 01:05:17 | run_model_spnudge | Model execution with spectral nudging |
| Post-processings, daily | postdatashomforecast_shom[conf] | 00:02:37 | 00:05:07 | ####### | run_postprod_datashom_forecast | Formatting hycom3d outputs for data.shom |
| Post-processings, daily | postsoapforecast_shom[conf] | 00:08:28 | 00:28:19 | 00:16:44 | run_postprod_soap_forecast | Formatting hycom3d outputs for soap |
| Post-processings, weekly | postdatashomspnudge_shom[conf] | 00:05:50 | 00:02:29 | ####### | run_postprod_datashom_spnudge | Formatting hycom3d outputs for data.shom |
| Post-processings, weekly | postsoapspnudge_shom[conf] | 00:09:10 | 00:30:27 | 00:21:52 | run_postprod_soap_spnudge | Formatting hycom3d outputs for soap |

Vortex jobs, tasks and conf are available on the operational HPC cluster of Meteo-France at

/homech/mxpt001/vortex/oper/[vapp]/[vconf]/

Versioning of the operational tasks and conf is performed with git and the GitLab platform (see the sloop-oper project).

We also archive the operational version of the conf and tasks on the Shom network at

/usr/site/data/DOPS/STM/DTO/SLOOP_OPER/[vapp]/[vconf]/[tasks|conf]_[tag].tgz

For instance, the operational tasks of the manga configuration of the hycom3d application tagged oper_0.1.0 would be stored in

/usr/site/data/DOPS/STM/DTO/SLOOP_OPER/hycom3d/manga/tasks_oper_0.1.0.tgz

The archive is built by sloop archive.

Information about the archives is given by

/usr/site/data/DOPS/STM/DTO/SLOOP_OPER/hycom3d/manga/info.json

Example:

    {
      "tasks": {
        "date": "2021-12-14 14:01",
        "by": "m_morvan",
        "from": "git@gitlab.com:GitShom/STM/",
        "version": "oper_0.1.0",
        "tarname": "tasks_oper_0.1.0.tgz"
      },
      "conf": {
        "date": "2021-12-14 14:01",
        "by": "m_morvan",
        "from": "git@gitlab.com:GitShom/STM/",
        "version": "oper_0.1.0",
        "tarname": "conf_oper_0.1.0.tgz"
      }
    }
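The info.json file can be used to locate the archives it describes. The sketch below is only an illustration: it assumes the file is strict JSON (a trailing comma before a closing brace would be rejected by `json.loads`) and that each `tarname` sits next to info.json in the `[vapp]/[vconf]` directory, as the path template above suggests. The function name `archive_paths` is hypothetical and is not a sloop command.

```python
import json
from pathlib import PurePosixPath

# Root of the Shom archive, copied from the path template above.
ARCHIVE_ROOT = PurePosixPath("/usr/site/data/DOPS/STM/DTO/SLOOP_OPER")

# Content modelled on the info.json example above.
EXAMPLE_INFO = """
{
  "tasks": {"date": "2021-12-14 14:01", "by": "m_morvan",
            "version": "oper_0.1.0", "tarname": "tasks_oper_0.1.0.tgz"},
  "conf": {"date": "2021-12-14 14:01", "by": "m_morvan",
           "version": "oper_0.1.0", "tarname": "conf_oper_0.1.0.tgz"}
}
"""


def archive_paths(info_text, vapp, vconf, root=ARCHIVE_ROOT):
    """Return the expected archive path for every entry of an info.json document."""
    info = json.loads(info_text)
    return {kind: root / vapp / vconf / entry["tarname"] for kind, entry in info.items()}


if __name__ == "__main__":
    for kind, path in archive_paths(EXAMPLE_INFO, "hycom3d", "manga").items():
        print(kind, path)
```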

Softs

Libraries

The operational versions of the sloop and vortex libraries are tagged and stored on the Shom network at

/usr/site/data/DOPS/STM/DTO/SLOOP_OPER/softs/[sloop|vortex]_[tag].tar

The archive is built by sloop archive.

Information about the archives is given by

/usr/site/data/DOPS/STM/DTO/SLOOP_OPER/softs/info.json

Example:

    {
      "sloop": {
        "date": "2021-12-14 14:01",
        "by": "m_morvan",
        "from": "git@gitlab.com:GitShom/STM/",
        "version": "oper_0.1.0",
        "tarname": "sloop_oper_0.1.0.tgz"
      },
      "vortex": {
        "date": "2021-12-14 14:02",
        "by": "m_morvan",
        "from": "git@gitlab.com:GitShom/STM/",
        "version": "oper_1.0.1",
        "tarname": "vortex_oper_1.0.1.tgz"
      }
    }

Python

The sloop python environment is located on Meteo-France HPC clusters at

/homech/mxpt001/vortex/sloop-env/

Versioning of the python environment is performed with git and the GitLab platform (see the sloop-oper project). The python environment is saved in a sloop-oper-environment.yml file by running on belenos:

$ /homech/mxpt001/vortex/sloop-env/bin/conda env export -n root --file sloop-oper-environment.yml

Inputs

Geoflow resources

Every day, geoflow resources are pushed to the operational products database of Meteo-France (BDPE).

| Product | Model | Institution | BDPE arrival time (retention period: 7 days) | Description |
| --- | --- | --- | --- | --- |
| 14620 | arpege | Meteo-France | | analysis arpege global instantaneous |
| 14621 | arpege | Meteo-France | 08:30 | analysis arpege North Sea-Gascogne-Mediterrannee instantaneous |
| 14622 | arpege | Meteo-France | | forecast arpege global instantaneous |
| 14623 | arpege | Meteo-France | 02:46 | forecast arpege North Sea-Gascogne-Mediterrannee instantaneous |
| 14680 | arpege | Meteo-France | | analysis arpege global cumulated |
| 14681 | arpege | Meteo-France | 08:30 | analysis arpege North Sea-Gascogne-Mediterrannee cumulated |
| 14682 | arpege | Meteo-France | | forecast arpege global cumulated |
| 14683 | arpege | Meteo-France | 03:29 | forecast arpege North Sea-Gascogne-Mediterrannee cumulated |
| 14674 | psy4 | MO-I | 01:53 | daily mercator North Sea-Gascogne-Mediterrannee |
| 14675 | psy4 | MO-I | | weekly mercator North Sea-Gascogne-Mediterrannee |
| 14676 | psy4 | MO-I | | daily mercator Arabian Sea |
| 14677 | psy4 | MO-I | | weekly mercator Arabian Sea |
| 14679 | obs | CMEMS | | daily river observations |

Input data are available in the local operational vortex cache for 15 days at

/chaine/mxpt001/vortex/mtool/cache/vortex/[vapp]/[vconf]/OPER.

Constants

The static files are stored in the GCO archive and are accessible via the GCO cycles listed below.

| Configuration | GCO cycle |
| --- | --- |
| Manga | |
| Med | |
| Indien | |

The operational version of the constants is tagged with a cycle name by GCO (a Meteo-France service) through a genv file, for instance:

    CYCLE_NAME="hycom3d01_hycom3d@indien-main.01"
    HYCOM3D_NAMING_0_TAR="hycom3d.naming_0.02"
    HYCOM3D_NEST_INDIEN_0_TAR="hycom3d.nest.indien_0.01"
    HYCOM3D_POSTPROD_INDIEN_0_TAR="hycom3d.postprod.indien_0.02"
    HYCOM3D_REGIONAL_INDIEN_0_TAR="hycom3d.regional.indien_0.01"
    HYCOM3D_RUN_INDIEN_0_TAR="hycom3d.run.indien_0.02"
    HYCOM3D_SAVEFIELD_INDIEN_0_TAR="hycom3d.savefield.indien_0.03"
    HYCOM3D_SPLIT_INDIEN_0_TAR="hycom3d.split.indien_0.01"
    HYCOM3D_TIDE_INDIEN_0_TAR="hycom3d.tide.indien_0.01"
    MASTER_HYCOM3D_IBC_INICON_INDIEN="hycom3d01_indien-inicon-main.02.libfrt.libopt.exe"
    MASTER_HYCOM3D_IBC_REGRIDCDF_INDIEN="hycom3d01_indien-regridcdf-main.01.libfrt.libopt.exe"
    MASTER_HYCOM3D_OCEANMODEL_INDIEN="hycom3d01_indien-model-main.01.libfrt.libopt.exe"
    MASTER_HYCOM3D_POSTPROD_TEMPCONVERSION="hycom3d01_tempconversion-main.02.libfrt.libopt.exe"
    MASTER_HYCOM3D_POSTPROD_TIMEFILTER="hycom3d01_filter-main.03.libfrt.libopt.exe"
    MASTER_HYCOM3D_POSTPROD_VERTINTERPOLATION="hycom3d01_vertinterpolation-main.02.libfrt.libopt.exe"
    MASTER_HYCOM3D_SPNUDGE_DEMERLIAC="hycom3d01_demerliac-main.02.libfrt.libopt.exe"
    MASTER_HYCOM3D_SPNUDGE_SPECTRAL="hycom3d01_spectral-main.02.libfrt.libopt.exe"

We also archive the namelists and executables on the Shom network at

/usr/site/data/DOPS/STM/DTO/SLOOP_OPER/softs/[vapp]/[vconf]/[exe|consts]_[cycle].tgz

The archive is built by sloop archive.
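To inspect a genv file such as the one shown above, its `KEY="value"` entries can be loaded into a dictionary. This is a minimal sketch that assumes every entry follows the shell-like `KEY="value"` form of the example; `parse_genv` and `executables` are illustrative helpers, not part of the GCO or vortex tooling.

```python
import shlex

# Three entries copied from the genv example above.
SAMPLE_GENV = '''
CYCLE_NAME="hycom3d01_hycom3d@indien-main.01"
HYCOM3D_NAMING_0_TAR="hycom3d.naming_0.02"
MASTER_HYCOM3D_OCEANMODEL_INDIEN="hycom3d01_indien-model-main.01.libfrt.libopt.exe"
'''


def parse_genv(text):
    """Parse KEY="value" entries separated by whitespace or newlines into a dict."""
    entries = {}
    for token in shlex.split(text):  # shlex removes the surrounding quotes
        key, sep, value = token.partition("=")
        if sep:
            entries[key] = value
    return entries


def executables(entries):
    """Return the entries whose key starts with MASTER_, i.e. the executables in the example."""
    return {key: value for key, value in entries.items() if key.startswith("MASTER_")}


if __name__ == "__main__":
    genv = parse_genv(SAMPLE_GENV)
    print(genv["CYCLE_NAME"])
    print(sorted(executables(genv)))
```

Because `shlex.split` treats any whitespace as a separator, the same function works whether the entries are written one per line or on a single line.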

Information about the archives is given by

/usr/site/data/DOPS/STM/DTO/SLOOP_OPER/softs/info.json

Example:

    {
      "exe": {
        "date": "2021-12-14 14:08",
        "by": "morvanm@morvanm@belenos",
        "from": "belenos.meteo.fr",
        "at": "/chaine/mxpt001/vortex/cycles/gco/tampon/",
        "version": "hycom3d01_hycom3d@manga-main.05",
        "tarname": "exe_hycom3d01_hycom3d@manga-main.05.tgz"
      },
      "consts": {
        "date": "2021-12-14 14:08",
        "by": "morvanm@morvanm@belenos",
        "from": "belenos.meteo.fr",
        "at": "/chaine/mxpt001/vortex/cycles/gco/tampon/",
        "version": "hycom3d01_hycom3d@manga-main.05",
        "tarname": "consts_hycom3d01_hycom3d@manga-main.05.tgz"
      }
    }

Outputs

Log

The log files are stored and available on the Meteo-France HPC systems (i.e. belenos and taranis) at

/home/ch/mxpt001/oldres/[yyyymmdd]/[job]_r00.[yyyymmddHHMMSS]

for instance

/homech/mxpt001/oldres/20220513/postsoapspnudge_shomindien_r00.20220513125404
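The log naming scheme above can be built and decoded with a few lines of Python. This is a sketch under assumptions: the `root` argument defaults to the template path and should be adapted to the actual mount point, and the meaning of the timestamp (job start, submission or end) is not stated in this document, so it is simply called `stamp` here. Both function names are hypothetical.

```python
from datetime import datetime


def log_path(job, stamp, root="/home/ch/mxpt001/oldres"):
    """Build [root]/[yyyymmdd]/[job]_r00.[yyyymmddHHMMSS] for a job name and a timestamp."""
    return f"{root}/{stamp:%Y%m%d}/{job}_r00.{stamp:%Y%m%d%H%M%S}"


def parse_log_name(path):
    """Split a log path back into its job name and timestamp."""
    name = path.rsplit("/", 1)[-1]
    job, _, stamp = name.rpartition("_r00.")
    return job, datetime.strptime(stamp, "%Y%m%d%H%M%S")


if __name__ == "__main__":
    example = log_path(
        "postsoapspnudge_shomindien",
        datetime(2022, 5, 13, 12, 54, 4),
        root="/homech/mxpt001/oldres",
    )
    print(example)
    print(parse_log_name(example))
```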

Model

The model outputs and restart files are stored on the Meteo-France archive system (hendrix) at

/chaine/mxpt/mxpt001/vortex/hycom3d/[conf]/OPER/[YYYY/MM/DD]/T0000P/run_[mode]

for instance

/chaine/mxpt/mxpt001/vortex/hycom3d/manga/OPER/2022/05/11/T0000P/run_spinup

The specifications of the model outputs depend on the regional configuration and are summarized below.

In the tables below, an empty cell takes the value of the cell above it.

Manga

| Product | Type | Parameters | Time step | Spatial extent |
| --- | --- | --- | --- | --- |
| MANGA | 2D | u, v, sst, sss, ssh, ubavg, vbavg | 1 hour | [-15°E : 2.875°E] ; [43.0087°N : 51°N] |
| MANGA | 3D | u, v, temp, saln, h, sigma | | |
| MANGASTERNE | STERNE | ssh, h, difs, um, vm | | |

Med

| Product | Type | Parameters | Time step | Spatial extent |
| --- | --- | --- | --- | --- |
| MED | 3D | u, v, temp, saln, h, sigma | 1 day | [-7°E : 36.2333°E] ; [30.2333°N : 45.8167°N] |
| MED | 2D | u, v, sst, sss, ssh, ubavg, vbavg | 1 hour | |
| MEDSTERNE | STERNE | ssh, h, difs, um, vm | 2 hours | [2.0°E : 12.0°E] ; [40.75°N : 44.5°N] |
| MEDOC | 3D | u, v, temp, saln, h | 1 hour | [1.5°E : 12.5°E] ; [37.7167°N : 44.9833°N] |
| MEDOC | 2D | u, v, sst, sss, ssh | | |
| MEDOR | 3D | u, v, temp, saln, h | 1 hour | [25.5°E : 36.2333°E] ; [30.2333°N : 37.5°N] |
| MEDOR | 2D | u, v, sst, sss, ssh | | |
| MEDIO | 3D | u, v, temp, saln, h | 1 hour | [9.48333°E : 22.4833°E] ; [30.2333°N : 39.4833°N] |
| MEDIO | 2D | u, v, sst, sss, ssh | | |
| GIBAL | 3D | u, v, temp, saln, h | 1 hour | [-7°E : 2.4833°E] ; [33.4833°N : 39.4833°N] |
| GIBAL | 2D | u, v, sst, sss, ssh | | |

Indien

| Product | Type | Parameters | Time step | Spatial extent |
| --- | --- | --- | --- | --- |
| INDIEN | 2D | u, v, sst, sss, ssh, ubavg, vbavg | 1 hour | [32.3°E : 77.05°E] ; [5°N : 30.1155°N] |
| INDIEN | 3D | u, v, temp, saln, h, sigma | | |

Post-production

The post-production outputs are stored on the Meteo-France archive system (hendrix). The release times are summarized in the table hereafter.

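| Configuration | data.shom daily | data.shom weekly | soap daily | soap weekly | sterne daily |
| --- | --- | --- | --- | --- | --- |
| Manga | 06:03 UTC | 14:44 UTC | 06:09 UTC | 14:50 UTC | (not oper yet) |
| Med | 06:41 UTC | 18:09 UTC | 07:06 UTC | 18:30 UTC | (not oper yet) |
| Indien | ######### | ######## | 06:18 UTC | 15:06 UTC | ######### |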

data.shom

The data.shom products are available at

/chaine/mxpt/mxpt001/vortex/hycom3d/[manga/med]/OPER/[YYYY/MM/DD]/T0000P/postprod/datashom_[forecast/spnudge]/

The data.shom production provides daily data from J-1 to J+5 and weekly data, on Wednesday, from J-14 to J.

| Production | Spatial extent |
| --- | --- |
| MANGA | [-15°E : 2.875°E] ; [43.0087°N : 51°N] |
| MEDOCNORD | [2°E : 12°E] ; [38°N : 44.5°N] |

The parameters saved for data.shom are:

| Type | Parameters | Time frequency | Vertical |
| --- | --- | --- | --- |
| 2D | u, v, sst, sss | 1 hour | surface |
| 3D | u, v, temp, saln | 25h mean | fixed depth |
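The data.shom path template and the J-1 to J+5 and J-14 to J windows described in this subsection can be turned into a small helper. The sketch below rests on two assumptions that are not stated explicitly in this document: that J is the run date appearing in the path, and that the `forecast` directory holds the daily production while `spnudge` holds the weekly one. The function names are illustrative only.

```python
from datetime import date, timedelta

# Day offsets relative to J, taken from the text: daily J-1..J+5, weekly J-14..J.
WINDOWS = {"forecast": (-1, 5), "spnudge": (-14, 0)}


def datashom_dir(conf, run_date, mode):
    """Return the data.shom output directory for a configuration, run date and mode."""
    if mode not in WINDOWS:
        raise ValueError(f"unknown mode: {mode}")
    return (
        f"/chaine/mxpt/mxpt001/vortex/hycom3d/{conf}/OPER/"
        f"{run_date:%Y/%m/%d}/T0000P/postprod/datashom_{mode}/"
    )


def covered_days(run_date, mode):
    """List the calendar days covered by a production, first and last day included."""
    first, last = WINDOWS[mode]
    return [run_date + timedelta(days=offset) for offset in range(first, last + 1)]


if __name__ == "__main__":
    run = date(2022, 5, 11)
    print(datashom_dir("manga", run, "forecast"))
    print(covered_days(run, "forecast")[0], covered_days(run, "forecast")[-1])
```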

sterne

The sterne products are available at

/chaine/mxpt/mxpt001/vortex/hycom3d/[manga/med]/OPER/[YYYY/MM/DD]/T0000P/postprod/sterne_forecast/

The sterne production provides daily data from J-1 to J+5.

| Production | Spatial extent | Time frequency |
| --- | --- | --- |
| MANGASTERNE | [-15°E : 2.875°E] ; [43.0087°N : 51°N] | 1 hour |
| MEDSTERNE | [2.0°E : 12.0°E] ; [40.75°N : 44.5°N] | 2 hours |

The parameters saved for sterne are:

| Type | Parameters | Vertical |
| --- | --- | --- |
| 2D | ssh | surface |
| 3D | h, um, vm, difs | model |

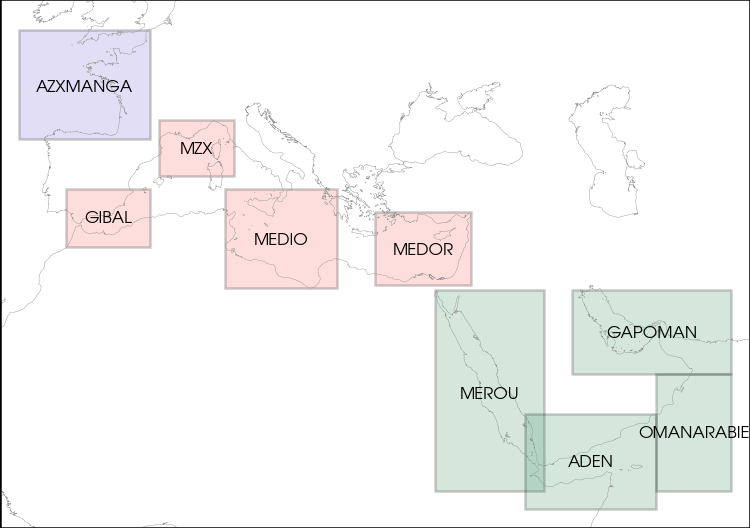

soap

The soap products are available at

/chaine/mxpt/mxpt001/vortex/hycom3d/[manga/med/indien]/OPER/[YYYY/MM/DD]/T0000P/postprod/soap_[forecast/spnudge]/

The soap production provides daily data from J-1 to J+5 and weekly data, on Wednesday, from J-14 to J-7.

| Production | Spatial extent |
| --- | --- |
| AZXMANGA | [-12°E : 2°E] ; [43°N : 51°N] |
| MZX | [3°E : 11°E] ; [40°N : 44.5°N] |
| MEDOR | [26°E : 36.23°E] ; [30.5°N : 37°N] |
| MEDIO | [10°E : 22°E] ; [30°N : 39°N] |
| GIBAL | [-7°E : 2°E] ; [34°N : 39°N] |
| GAPOMAN | [47°E : 64°E] ; [22°N : 31°N] |
| OMANARABIE | [56°E : 64°E] ; [10°N : 22°N] |
| ADEN | [42°E : 56°E] ; [8°N : 18°N] |
| MEROU | [32.35°E : 44°E] ; [10°N : 30.07°N] |

The parameters saved for soap are:

| Type | Parameters | Time | Vertical |
| --- | --- | --- | --- |
| 2D | dyn (ssh) | demerliac, 25h mean | surface |
| 3D | dyn (u, v), hydro (temp, saln) | 1 hour, 25h mean | fixed depth |
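Since the soap production areas overlap in places, it can be useful to find which of them cover a given position. The following sketch copies the bounding boxes from the soap spatial-extent table; the dictionary layout and the `areas_containing` helper are assumptions made for illustration, and box edges are treated as inclusive.

```python
# Soap production areas as (lon_min, lon_max, lat_min, lat_max),
# in degrees east and degrees north, copied from the soap spatial-extent table.
SOAP_AREAS = {
    "AZXMANGA": (-12.0, 2.0, 43.0, 51.0),
    "MZX": (3.0, 11.0, 40.0, 44.5),
    "MEDOR": (26.0, 36.23, 30.5, 37.0),
    "MEDIO": (10.0, 22.0, 30.0, 39.0),
    "GIBAL": (-7.0, 2.0, 34.0, 39.0),
    "GAPOMAN": (47.0, 64.0, 22.0, 31.0),
    "OMANARABIE": (56.0, 64.0, 10.0, 22.0),
    "ADEN": (42.0, 56.0, 8.0, 18.0),
    "MEROU": (32.35, 44.0, 10.0, 30.07),
}


def areas_containing(lon, lat, areas=SOAP_AREAS):
    """Return the names of the areas whose bounding box contains the point (edges included)."""
    return [
        name
        for name, (west, east, south, north) in areas.items()
        if west <= lon <= east and south <= lat <= north
    ]


if __name__ == "__main__":
    print(areas_containing(5.0, 43.0))   # a point in the north-western Mediterranean
    print(areas_containing(43.0, 12.0))  # a point covered by two overlapping boxes
```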

Pre-release

Pre-release refers to the versions of configurations we consider ready to be pushed in the operational environment.

Sources

The pre-released sources are pulled on the R&D HPC cluster at

~/Repos_prerelease/

The sources are:

the Vortex library on the shom_sloop-prerelease branch

the Sloop library on the prerelease branch

the Vortex tasks in the sloop-oper/{vapp}/tasks directory

Experiment

A prerelease@sloopoper experiment is initialised on the R&D HPC cluster for the three configurations:

/scratch/work/morvanm/prerelease@sloopoper/hycom3d/manga

/scratch/work/morvanm/prerelease@sloopoper/hycom3d/med

/scratch/work/morvanm/prerelease@sloopoper/hycom3d/indien

The daily and weekly workflows are run by sloop via sloop run.

daily workflow named {vconf}_forecast.cfg:

    recdata=recextfiles_spinup,recextfiles_forecast
    pre=run_ibc_spinup,run_ibc_forecast,run_atmfrc_spinup,run_atmfrc_forecast,run_rivers_spinup,run_rivers_forecast,
    ana=run_model_spinup,
    fore=run_model_forecast,
    post=run_postprod_datashom_forecast,run_postprod_soap_forecast,
    arcdata=arcextfiles_spinup,arcextfiles_forecast,arcextfiles_daily_datashom,arcextfiles_daily_soap,

weekly workflow named {vconf}_spnudge.cfg:

    recdata=recextfiles_spnudge,
    preproc=run_ibc_spnudge,run_rivers_spnudge,run_atmfrc_spnudge,
    model=run_model_spnudge,
    postproc=run_postprod_datashom_spnudge,run_postprod_soap_spnudge,
    arcdata=arcextfiles_spnudge,arcextfiles_weekly_datashom,arcextfiles_weekly_soap,
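The workflow files above associate each step with a comma-separated list of tasks. A minimal reader is sketched below, assuming one `step=task,task,` entry per line with an optional trailing comma; this is only an illustration of the layout and does not reproduce the parser used by sloop.

```python
# Content of the weekly workflow shown above, one step per line.
WEEKLY_CFG = """\
recdata=recextfiles_spnudge,
preproc=run_ibc_spnudge,run_rivers_spnudge,run_atmfrc_spnudge,
model=run_model_spnudge,
postproc=run_postprod_datashom_spnudge,run_postprod_soap_spnudge,
arcdata=arcextfiles_spnudge,arcextfiles_weekly_datashom,arcextfiles_weekly_soap,
"""


def parse_workflow(text):
    """Return an ordered mapping of step name to its list of task names."""
    steps = {}
    for line in text.splitlines():
        key, sep, value = line.partition("=")
        if not sep:
            continue  # skip blank or malformed lines
        # Trailing commas produce empty items, which are dropped here.
        steps[key.strip()] = [task.strip() for task in value.split(",") if task.strip()]
    return steps


if __name__ == "__main__":
    for step, tasks in parse_workflow(WEEKLY_CFG).items():
        print(f"{step}: {', '.join(tasks)}")
```

Python dictionaries keep insertion order, so iterating over the result follows the order in which the steps are written in the file.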

Real-time testing

The pre-release configurations are tested in real time on the R&D HPC system. For each configuration, two crontab entries are set up, for instance:

    15 06 * * * /scratch/work/morvanm/prerelease@sloopoper/hycom3d/med/cron_med_daily.sh
    15 13 * * 3 /scratch/work/morvanm/prerelease@sloopoper/hycom3d/med/cron_med_weekly.sh

The bash script executed by the crontab contains the sloop run command as well as the shell environment setup. For instance, cron_med_daily.sh contains:

    #!/usr/bin/bash
    # Load the environment modules and the sloop conda environment
    source /etc/profile.d/modules.sh
    module purge
    module use ~/Modulefiles
    module load conda/sloop
    # Make the pre-release vortex and sloop commands and libraries available
    PATH=$PATH:$HOME/bin:$HOME/Repos_prerelease/vortex/bin:$HOME/Repos_prerelease/sloop/bin
    export PATH
    VTXPATH=$HOME/Repos_prerelease/vortex
    PYTHONPATH=$VTXPATH/project:$VTXPATH/site:$VTXPATH/src:$HOME/Repos_prerelease/sloop:$HOME/Repos_prerelease/xoa
    export PYTHONPATH
    # Run the daily workflow for the current date
    RUNDATE=$(date '+%Y%m%d')
    cd /scratch/work/morvanm/prerelease@sloopoper/hycom3d/med
    export MTOOLDIR=/scratch/work/morvanm
    sloop run -w med_forecast --begindate $RUNDATE --ncycle 1 --freq 1
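The two crontab entries above use the standard five scheduling fields (minute, hour, day of month, month, day of week), so the first one fires every day at 06:15 and the second one on day of week 3, which is Wednesday in cron numbering (0 is Sunday). The sketch below checks a date against such an entry. It is deliberately limited to fields that are either `*` or a single number, which is all these two entries need, and it is not a complete cron implementation; times are interpreted in whatever time zone the cron daemon uses, which is not specified here.

```python
from datetime import datetime


def cron_matches(expression, when):
    """Tell whether a datetime matches a crontab schedule made of '*' or plain numbers."""
    fields = expression.split()[:5]  # minute, hour, day of month, month, day of week
    actual = (
        when.minute,
        when.hour,
        when.day,
        when.month,
        when.isoweekday() % 7,  # cron numbering: 0 = Sunday ... 6 = Saturday
    )
    return all(field == "*" or int(field) == value for field, value in zip(fields, actual))


if __name__ == "__main__":
    daily, weekly = "15 06 * * *", "15 13 * * 3"
    wednesday = datetime(2022, 5, 11, 13, 15)
    thursday = datetime(2022, 5, 12, 13, 15)
    print(cron_matches(weekly, wednesday), cron_matches(weekly, thursday))
    print(cron_matches(daily, datetime(2022, 5, 12, 6, 15)))
```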

Archive

The model outputs, restart files and post-production outputs are pushed to hendrix.meteo.fr. Output data have to be checked, for instance by the services in charge of distribution or of configuration development.

Validation

In the following sub-sections, we describe a procedure to set up and run a hindcast run with the pre-released resources.

Initialisation

Load your sloop environment

Run sloop init with suitable arguments, for example:

sloop init hycom3d manga validprerelease@$LOGNAME -w $WORKDIR --sloop-env oper

Go to the experiment directory given by sloop, by running for example:

cd $WORKDIR/validprerelease@$LOGNAME/hycom3d/manga

At this stage, you should carefully fill in the conf/[vapp]_[vconf].ini file. You can either copy the corresponding file stored on belenos.meteo.fr at ~morvanm/Repos_prerelease/conf or fetch it from a clone of sloop-oper <https://gitlab.com/GitShom/STM/sloop-oper/> with the relevant tag.

Then, put the pre-release static resources in cache by running sloop constants:

sloop constants store pull

Run

Edit the workflow configuration file, named [vapp][vconf]_[hindcast].cfg. This file has to contain:

    recdata = recextfiles_hindcast_spnudge,
    preproc = run_bc_hindcast, run_atmfrc_hindcast, run_rivers_hindcast,
    model = run_model_hindcast_spnudge,
    postproc = run_postprod_soap_hindcast_spnudge, arcextfiles_hindcast_spnudge,
    arcpost = arcextfiles_hindcast_soap,

Submit the workflow by running sloop run, for instance:

sloop run -w hycom3dmanga_hindcast --begindate 20211214 --ncycle 10 --res-xpid OPER --res-type nrt

What’s new ?

2022-01-06

Release scheduled on T1-2023.

Release not done yet.

Versioning

| source | tag |
| --- | --- |
| sloop | prerelease.2023T1.01 |
| vortex | prerelease.2023T1.01 |
| conf & tasks | prerelease.2023T1.01 |
| constants (hendrix.meteo.fr) | hycom3d01_hycom3d@manga-main.12@morvanm, hycom3d01_hycom3d@med-main.03@morvanm, hycom3d01_hycom3d@indien-main.08@morvanm |

Details

Add the STERNE production for IRSN over MANGA and MED configurations

New fields (um, vm and difs) are now computed by hycom. The STERNE production is pushed daily to vortex.archive.fr in

[vapp]/[vconf]/prerelease/[YYYYMMDD]T0000P/postprod/sterne_forecast

Hycom sources modified in sloop, see merge requests 76 and 77;

New geometry and fields added in vortex, see merge request 25;

The modified static files are postprod.cfg (manga and med), blkdat_cmo_*.input (manga, med and indien) and savefield_*.input (manga and med);

Since all regional configurations are impacted, three hycom executables have been generated;

The impacted tasks & conf are run_model_spinup.py, run_postprod_sterne_forecast.py, hycom3d_manga.ini, hycom3d_med.ini, hycom3d_indien.ini.

Add the model and post-production outputs checking

We now check the number of files generated by the model and post-production tasks, as well as the number of terms contained in each file. If files are missing, an ASCII file describing them is generated and pushed to vortex.archive.fr in the same directory as the task outputs.

The command sloop check promises has been implemented, see merge request 72;

The vortex data components have been added, see the corresponding vortex commit a27aa9…;

The impacted tasks are run_model_spinup.py, run_postprod_datashom_forecast.py;

A promises.cfg has been added for each regional configuration (manga, med, indien).
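The idea behind the output check described above, comparing the files a task promised with the files actually produced and writing a plain-text report when some are missing, can be sketched as follows. This is not the implementation of sloop check promises: the promises.cfg format is not described here, so the expected file names are passed in directly, and the file names, task name and report layout are all hypothetical.

```python
def missing_outputs(expected, present):
    """Return the expected file names that are absent, in their original order."""
    available = set(present)
    return [name for name in expected if name not in available]


def format_report(task, missing):
    """Build the ASCII report describing the missing files of a task."""
    lines = [f"task: {task}", f"missing files: {len(missing)}"]
    lines += [f"  {name}" for name in missing]
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    # Hypothetical file names, for illustration only.
    expected = ["forecast_day1.nc", "forecast_day2.nc", "forecast_day3.nc"]
    present = ["forecast_day1.nc", "forecast_day3.nc"]
    missing = missing_outputs(expected, present)
    if missing:
        print(format_report("run_model_spinup", missing), end="")
```

The check on the number of terms in each file would need a reader for the output format and is left out of this sketch.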

Update SOAP production

The dynamical and hydrological fields are now separated in the SOAP production.

The daily analysed data are no longer provided.

We add the MEROU area.

Sloop has been modified, see merge request 75;

The vortex data components have been modified, see the corresponding vortex commit a04eac…;

The modified static file is postprod.cfg for each regional configuration.

Delivery

In order to make a new version of a configuration operational, Shom has to deliver materials to 3 Meteo-France services:

vortex.support

The vortex.support team is in charge of reviewing the Shom implementation in the vortex library. In order to be reviewed, a dedicated branch of the vortex library has to be pushed by Shom on the cnrm git server. The push has to be accompanied by an e-mail to vortex.support.

GCO

The constants (namelists, static binaries, executables…) are stored by the GCO service. The new cycle, genv and the files associated with, are provided on the R&D hpc cluster (belenos). An e-mail has to be sent to the GCO team with a documentation indicating the changes.

IGA

The operational set up is performed by IGA. The tasks and conf files are provided on the R&D hpc cluster (belenos). The branch release of the sloop repository is also provided on the R&D hpc cluster. An e-mail has to be sent to the IGA team.

Repro

To re-run the OPER experiment, we deploy an installation that mimics the operational one on the R&D HPC cluster (belenos) at

/scratch/work/morvanm/oper@morvanm

The sources (vortex and sloop repositories, python installation and tasks) are stored at

/home/ext/sh/csho/morvanm/Repos_oper

The sloop run command can be used.